Multimodal Perception and Comprehension of Corner Cases in Autonomous Driving

ECCV 2024 Workshop @ Milano, Italy, Monday, Sep 30th

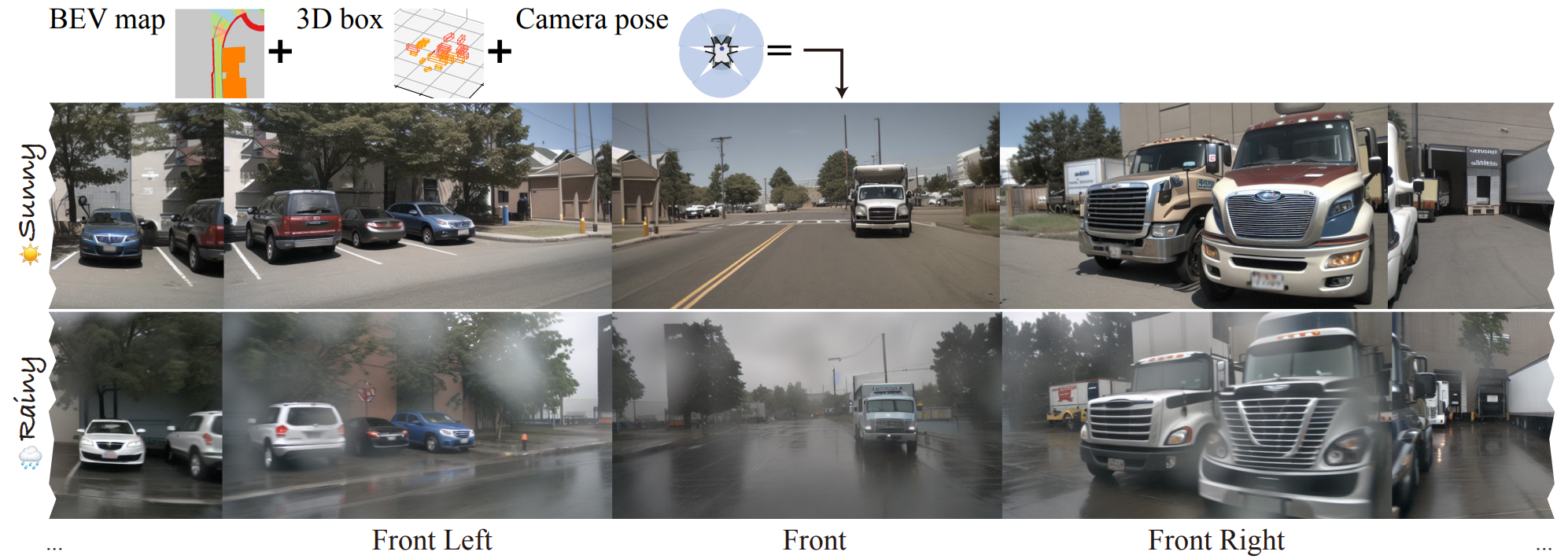

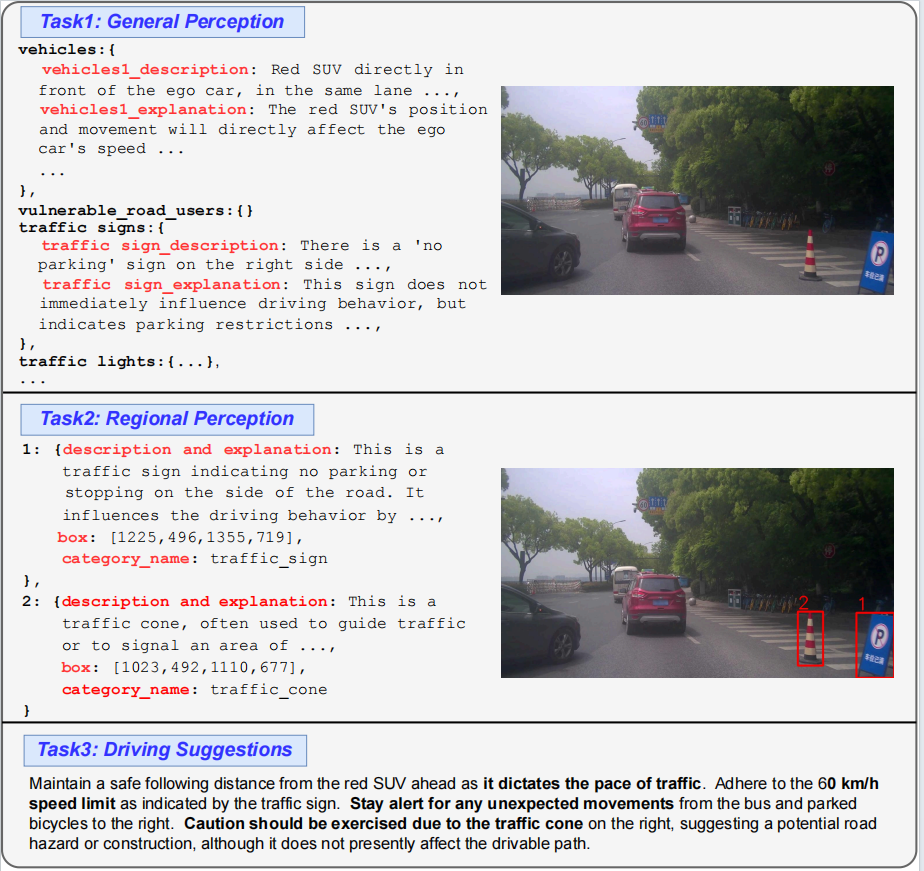

Visual comprehension data samples from CODA-LM.

Introduction

This workshop aims to bridge the gap between state-of-the-art autonomous driving techniques and fully intelligent, reliable self-driving agents, particularly when confronted with corner cases: rare but critical situations that challenge the limits of reliable autonomous driving. The advent of Multimodal Large Language Models (MLLMs), represented by GPT-4V, has demonstrated unprecedented capabilities in multimodal perception and comprehension, even in dynamic street scenes. However, leveraging MLLMs to tackle the nuanced challenges of self-driving remains an open problem. This workshop seeks to foster innovative research in multimodal perception and comprehension, end-to-end driving systems, and the application of advanced AIGC techniques to autonomous systems. We conduct a challenge comprising two tracks: corner case scene understanding and corner case scene generation. The dual-track challenge is designed to advance the reliability and interpretability of autonomous systems in both typical and extreme corner cases.

Challenges

Note: participants are encouraged to submit their reports as workshop papers! Check the Call for Papers for more details!

Track 1: Corner Case Scene Understanding

This track is designed to enhance the perception and comprehension abilities of MLLMs for autonomous driving, focusing on global scene understanding, regional reasoning, and actionable suggestions. Using the CODA-LM dataset, which consists of 5,000 images with textual descriptions covering global driving scenarios, detailed analyses of corner cases, and future driving recommendations, this track seeks to promote the development of more reliable and interpretable autonomous driving agents.

- Datasets: Please refer to CODA-LM for detailed dataset introduction and dataset downloads.

- Please find more information on the Track1 page.

Track 2: Corner Case Scene Generation

This track focuses on improving the capabilities of diffusion models to create multi-view street scene videos that are consistent with 3D geometric scene descriptors, including Bird's Eye View (BEV) maps and 3D LiDAR bounding boxes. Building on MagicDrive for controllable 3D video generation, this track aims to advance scene generation for autonomous driving, targeting better consistency, higher resolution, and longer duration.

- Datasets: Please refer to MagicDrive for detailed dataset introduction and dataset downloads (i.e., the nuScenes dataset).

- Please find more information on the Track2 page.

Call for Papers

Overview. This workshop aims to foster innovative research and development in multimodal perception and comprehension of corner cases for autonomous driving, which is critical for advancing next-generation, industry-level self-driving solutions. Our focus encompasses a broad range of cutting-edge topics, including but not limited to:

- Corner case mining and generation for autonomous driving.

- 3D object detection and scene understanding.

- Semantic occupancy prediction.

- Weakly supervised learning for 3D LiDAR and 2D images.

- One/few/zero-shot learning for autonomous perception.

- End-to-end autonomous driving systems with Large Multimodal Models.

- Large Language Model techniques adaptable for self-driving systems.

- Safety/explainability/robustness for end-to-end autonomous driving.

- Domain adaptation and generalization for end-to-end autonomous driving.

Submission tracks. All submissions should be anonymous, and reviewing is double-blind. We encourage two types of submissions:

- Full workshop papers not previously published or accepted for publication in substantially similar form in any peer-reviewed venue, including journals, conferences, or workshops. Papers are limited to 14 pages, including figures and tables, in ECCV format; extra pages containing only cited references are allowed. Accepted papers will be part of the official ECCV proceedings.

  [Download LaTeX Template]

  [OpenReview Submission Site]

- Extended abstracts not previously published or accepted for publication in substantially similar form in any other peer-reviewed venue, including journals, conferences, or workshops. Papers are limited to 4 pages and will NOT be included in the official ECCV proceedings (non-archival), so they may be re-submitted to later conferences.

  [Download LaTeX Template]

  [OpenReview Submission Site]

Important Dates (AoE Time, UTC-12)

| Event | Date |
| --- | --- |
| Challenge Open to Public | June 15, 2024 |
| Challenge Submission Deadline | Aug 15, 2024 11:59 PM |
| Challenge Notification to Winner | Sep 1, 2024 |
| Full Paper Submission Deadline | Aug 1, 2024 11:59 PM |
| Full Paper Notification to Authors | Aug 10, 2024 11:59 PM |
| Full Paper Camera Ready Deadline | Aug 15, 2024 11:59 PM |
| Abstract Paper Submission Deadline | Sep 1, 2024 11:59 PM |
| Abstract Paper Notification to Authors | Sep 7, 2024 11:59 PM |
| Abstract Paper Camera Ready Deadline | Sep 10, 2024 11:59 PM |
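The deadlines above are given in AoE (UTC-12), while the workshop schedule uses Milano time (UTC+2). The conversion can be sketched as follows. This is only a minimal illustration, not an official tool: the function name `convert_aoe_deadline` and the fixed-offset time zones are assumptions made for this example, and the parser assumes English month abbreviations as written in the table.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets taken from the headings on this page (assumed, no DST handling).
AOE = timezone(timedelta(hours=-12), name="AoE")     # Anywhere on Earth, UTC-12
MILANO = timezone(timedelta(hours=2), name="UTC+2")  # Milano time, UTC+2


def convert_aoe_deadline(text: str, target: timezone = MILANO) -> datetime:
    """Parse a deadline such as 'Aug 15, 2024 11:59 PM' (AoE) and convert it.

    Hypothetical helper for illustration; relies on an English locale
    for the '%b' month abbreviation.
    """
    naive = datetime.strptime(text, "%b %d, %Y %I:%M %p")
    # Attach the AoE offset, then shift to the requested time zone.
    return naive.replace(tzinfo=AOE).astimezone(target)


if __name__ == "__main__":
    local = convert_aoe_deadline("Aug 15, 2024 11:59 PM")
    print(local.strftime("%b %d, %Y %I:%M %p %Z"))
```

Under these assumptions, a deadline of Aug 15, 2024 11:59 PM AoE corresponds to Aug 16, 2024 at 13:59 in UTC+2, so submitters in Milano have until early afternoon of the following day.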

Schedule (Milano Time, UTC+2)

| Session | Time |
| --- | --- |
| Opening Remarks, Welcome, and Challenge Summary | 08:50 AM - 09:00 AM |
| Invited Talk 1: TBD | 09:00 AM - 09:45 AM |
| Invited Talk 2: TBD | 09:45 AM - 10:30 AM |
| Poster Session and Coffee Break | 10:30 AM - 11:00 AM |
| Invited Talk 3: TBD | 11:00 AM - 11:45 AM |
| Invited Talk 4: TBD | 11:45 AM - 12:30 PM |
| Oral Talk 1: Winner of Track 1 | 12:30 PM - 12:40 PM |
| Oral Talk 2: Winner of Track 2 | 12:40 PM - 12:50 PM |
| Awards & Future Plans | 12:50 PM - 01:00 PM |

Organizers

HKUST

CUHK

Huawei

Huawei

DUT

TU Delft

Huawei

Huawei

Huawei

Huawei

Huawei

CUHK

DUT